Significance

Keypoints

- Propose QA-GNN which incorporates knowledge graph information for question answering with structured reasoning

- Demonstrate qualitative and quantitative performance of the QA-GNN

Review

Background

Self-supervised training of large language models has been successful in learning representations of text data.

However, language models still have room for improvement on question answering problems that require structured reasoning.

On the other hand, knowledge graphs are known to be well suited for tasks that require structured reasoning.

This paper proposes QA-GNN, which brings knowledge graph representations, extracted with a graph neural network (specifically, a graph attention network, GAT), into question answering.

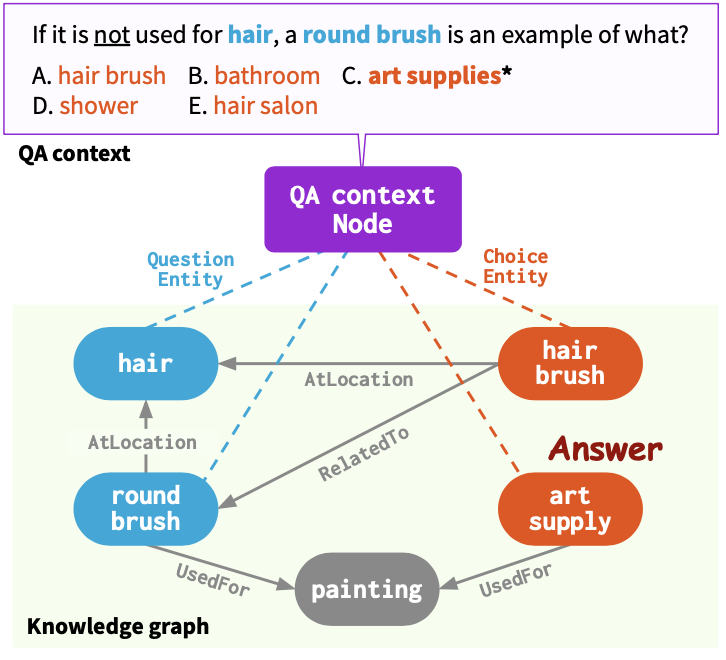

QA-GNN differs from previous attempts to exploit knowledge graphs for question answering in that the question-answer text representation is treated directly as an entity (node) in the knowledge graph.

Question and answer are considered to be nodes of the knowledge graph

This reminded me of the extended knowledge graph for purchase behavior prediction in this post.

Could directly linking knowledge graphs to the representation of a specific task of interest be a general solution to real-world problems?

Keypoints

Propose QA-GNN which incorporates knowledge graph information for question answering with structured reasoning

The QA-GNN framework

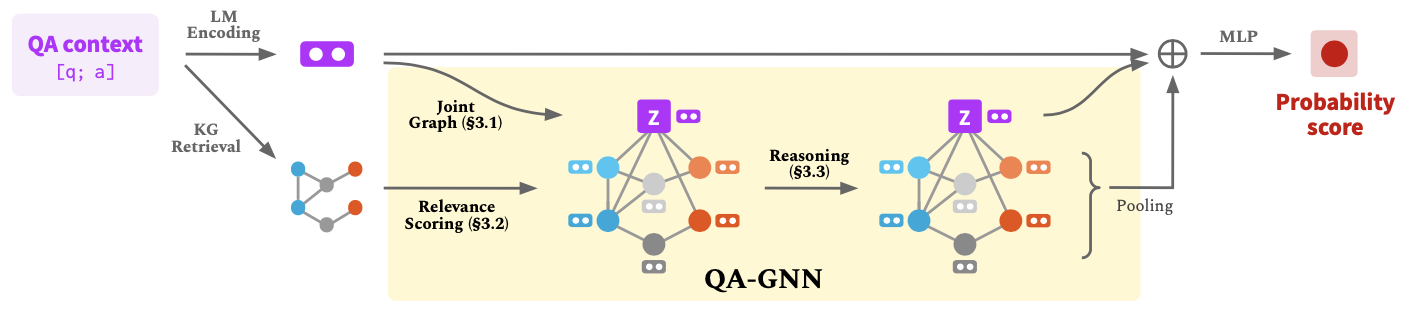

A schematic illustration of QA-GNN is provided first, so you can refer back to this figure while reading the details of the method.

As mentioned in the background section, the question-answer text representation is treated as an entity of the knowledge graph.

In practice, the question text and the answer text are linked to their related knowledge graph entities using the method from this paper.
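The exact linking procedure is described in the referenced paper. As a rough illustration only, the sketch below assumes a simple n-gram string matcher against a set of ConceptNet-style concept names (lowercase, words joined by underscores). The function `link_entities` and the toy vocabulary are hypothetical.

```python
import re


def link_entities(text, concept_vocab, max_ngram=3):
    """Return KG concepts whose surface form appears as an n-gram of `text`.

    Hypothetical sketch: concepts are assumed to be lowercase phrases with
    words joined by underscores (e.g. "revolving_door").
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    found = set()
    # Try every contiguous n-gram of up to max_ngram words.
    for n in range(1, max_ngram + 1):
        for i in range(len(tokens) - n + 1):
            candidate = "_".join(tokens[i:i + n])
            if candidate in concept_vocab:
                found.add(candidate)
    return found


vocab = {"revolving_door", "door", "bank", "security", "mall"}
question = ("A revolving door is convenient for two direction travel, "
            "but also serves as a security measure at a what?")
print(sorted(link_entities(question, vocab)))  # ['door', 'revolving_door', 'security']
print(sorted(link_entities("bank", vocab)))    # ['bank']
```

Real linkers usually also lemmatize tokens and filter stopword-only matches. This sketch leaves those steps out to keep the idea visible.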

The joint graph, or working graph, is a subgraph of the knowledge graph that contains the question and answer nodes together with the nodes on k-hop paths between them.

The representation $z$ of the concatenated question-answer text is also added to the working graph as a separate node, connected to the question and answer nodes.
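Below is a minimal sketch of the working-graph construction. It assumes an undirected knowledge graph stored as an adjacency dict and a hop limit `k`. A node `u` is kept when its hop distance from the question entities plus its hop distance to the answer entities is at most `k`. This is one simple way to collect nodes on short question-answer paths, not the authors' retrieval code. The names `build_working_graph` and `"z"` are illustrative.

```python
from collections import deque


def bfs_distances(adj, sources, max_depth):
    """Multi-source BFS: hop distance from the nearest source, up to max_depth hops."""
    dist = {s: 0 for s in sources}
    queue = deque(dist)
    while queue:
        u = queue.popleft()
        if dist[u] == max_depth:
            continue  # do not expand beyond the hop limit
        for v in adj.get(u, ()):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def build_working_graph(adj, q_nodes, a_nodes, k=2, context_node="z"):
    q_nodes, a_nodes = set(q_nodes), set(a_nodes)
    d_q = bfs_distances(adj, q_nodes, k)
    d_a = bfs_distances(adj, a_nodes, k)
    # Keep u if some question entity -> u -> answer entity route has <= k hops.
    nodes = {u for u in d_q if u in d_a and d_q[u] + d_a[u] <= k}
    nodes |= q_nodes | a_nodes
    # Directed edge set restricted to the kept nodes.
    edges = {(u, v) for u in nodes for v in adj.get(u, ()) if v in nodes}
    # The QA context node connects to every question and answer entity.
    for e in q_nodes | a_nodes:
        edges |= {(context_node, e), (e, context_node)}
    return nodes | {context_node}, edges


def undirected(pairs):
    adj = {}
    for u, v in pairs:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    return adj


kg = undirected([("revolving_door", "door"), ("door", "bank"), ("security", "bank"),
                 ("bank", "money"), ("money", "wallet"), ("mall", "door")])
nodes, edges = build_working_graph(kg, {"revolving_door", "security"}, {"bank"}, k=2)
print(sorted(nodes))  # ['bank', 'door', 'revolving_door', 'security', 'z']
```

Here "mall" and "money" are dropped because every route through them from a question entity to "bank" takes more than two hops.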

The node embeddings of the working graph are obtained by the pre-processing method described in this paper.

At this point the question and answer are equipped with related knowledge in a graph structure, but the authors take one more step before feeding the graph into a GNN.

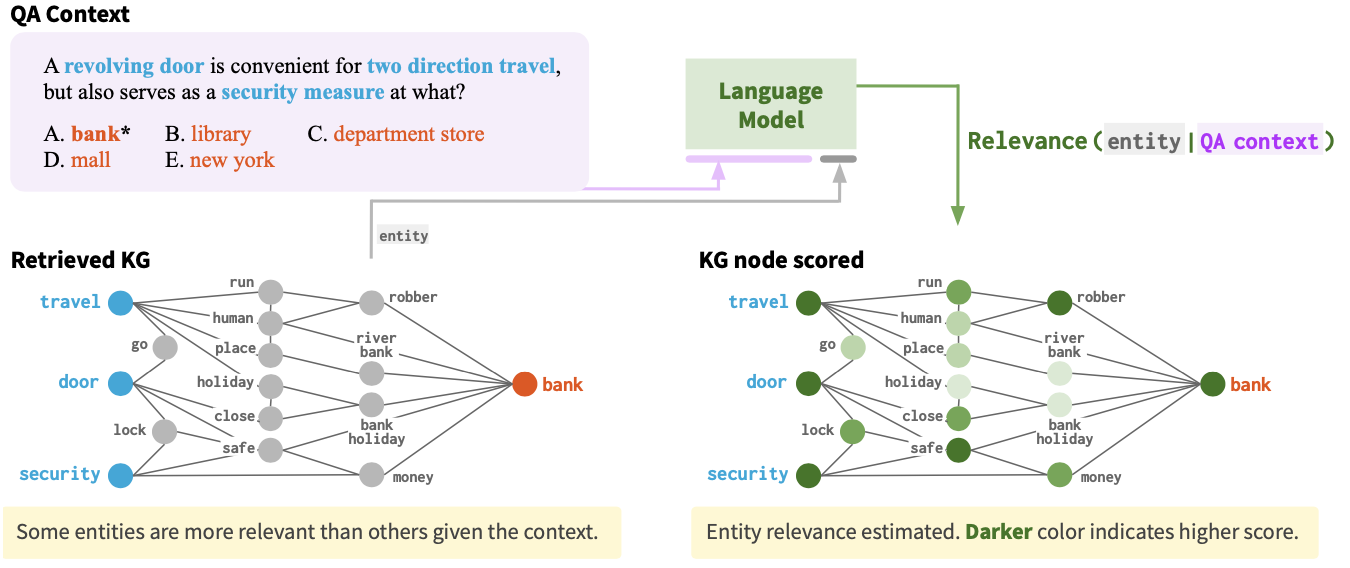

Since the working graph may contain many irrelevant knowledge nodes and relations, relevance scoring is performed on its nodes.

The score for each node is computed by a pre-trained language model that takes the question-answer text concatenated with the text corresponding to that node.

Every node of the working graph except $z$ is then scaled by its relevance score.

Relevance scoring with a language model
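To make the scoring-and-scaling step concrete, here is a sketch with a pluggable scoring function. The paper uses a pre-trained language model for the score. `toy_lm_score` is a hypothetical stand-in based on word overlap so that the example runs without model weights. Squashing the score into (0, 1) with a sigmoid is also an assumption of this sketch.

```python
import math


def toy_lm_score(qa_text, node_name):
    """HYPOTHETICAL stand-in for the pre-trained LM: a logit from word overlap.

    A real implementation would feed "qa_text + node text" to an LM and read a score.
    """
    qa_words = set(qa_text.lower().split())
    node_words = set(node_name.lower().replace("_", " ").split())
    return 2.0 * len(qa_words & node_words) - 1.0


def scale_by_relevance(node_emb, qa_text, score_fn=toy_lm_score, context_node="z"):
    """Scale each node embedding by a relevance score in (0, 1); the context node z is not scaled."""
    scaled, scores = {}, {}
    for node, emb in node_emb.items():
        if node == context_node:
            scaled[node] = list(emb)
            continue
        rho = 1.0 / (1.0 + math.exp(-score_fn(qa_text, node)))  # sigmoid (assumed)
        scores[node] = rho
        scaled[node] = [rho * x for x in emb]
    return scaled, scores


emb = {"z": [1.0, 1.0], "door": [1.0, 2.0], "wallet": [1.0, 2.0]}
scaled, scores = scale_by_relevance(emb, "revolving door security bank")
print(round(scores["door"], 3), round(scores["wallet"], 3))  # 0.731 0.269
```

Passing the scorer in as `score_fn` keeps the scaling logic independent of the model, so a real LM-based scorer can be swapped in without touching the rest.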

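We are finally ready for the GAT. Reasoning with the GAT is performed over the working graph, which joins the question-answer context and the knowledge graph in a single structure. This is what makes QA-GNN more powerful than previous methods that reason over the knowledge graph separately from the question-answer context.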
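For reference, here is a minimal single-head GAT layer in NumPy, following the standard GAT formulation. Attention logits are $e_{ij} = \mathrm{LeakyReLU}(a^\top [W h_i \,\|\, W h_j])$, and each node takes a softmax over its neighbors. This is a plain GAT sketch, not QA-GNN's layer. The paper's GNN is a modified GAT that also uses node-type and relation information. This sketch also omits multi-head attention and the output nonlinearity.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def gat_layer(H, adj, W, a):
    """Single-head GAT layer.

    H: (N, F) node features; adj: (N, N) 0/1 adjacency (self-loops added here);
    W: (F, F') shared projection; a: (2F',) attention vector.
    Returns updated features (N, F') and attention weights alpha (N, N).
    """
    n = H.shape[0]
    Wh = H @ W
    f_out = Wh.shape[1]
    # e_ij = LeakyReLU(a^T [Wh_i || Wh_j]), computed by splitting a into two halves.
    e = leaky_relu((Wh @ a[:f_out])[:, None] + (Wh @ a[f_out:])[None, :])
    # Only neighbors (and the node itself) receive attention.
    mask = (adj + np.eye(n)) > 0
    e = np.where(mask, e, -np.inf)
    # Row-wise softmax over each node's neighborhood.
    e = e - e.max(axis=1, keepdims=True)
    alpha = np.exp(e)
    alpha /= alpha.sum(axis=1, keepdims=True)
    return alpha @ Wh, alpha


rng = np.random.default_rng(0)
n, f_in, f_out = 5, 4, 3
adj = np.zeros((n, n))
for i, j in [(0, 1), (0, 2), (1, 3), (2, 4)]:
    adj[i, j] = adj[j, i] = 1.0
H = rng.normal(size=(n, f_in))
W = rng.normal(size=(f_in, f_out))
a = rng.normal(size=2 * f_out)
H_new, alpha = gat_layer(H, adj, W, a)
print(H_new.shape)  # (5, 3)
```

The returned `alpha` matrix holds the learned edge weights. These are the kind of attention weights that the qualitative analysis below visualizes.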

Demonstrate qualitative and quantitative performance of the QA-GNN

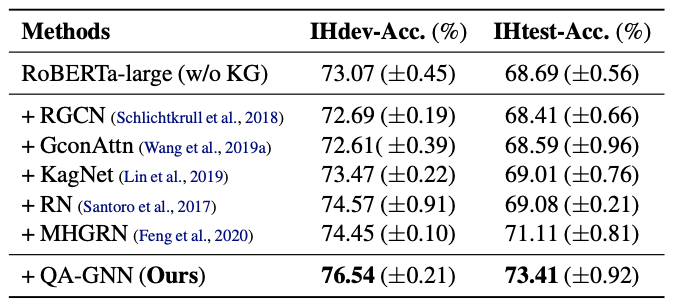

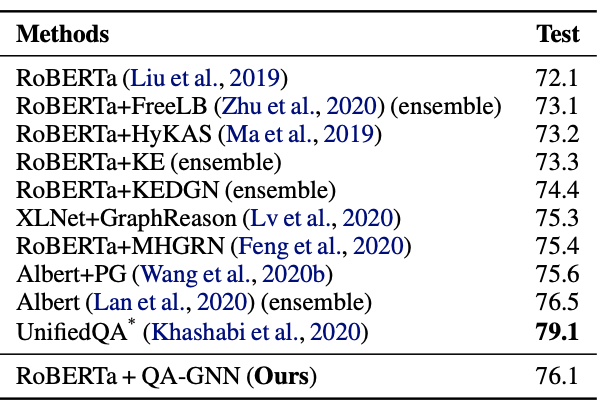

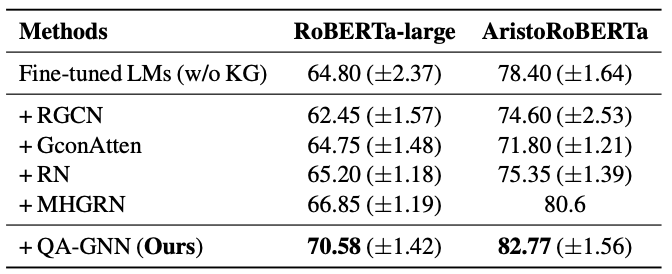

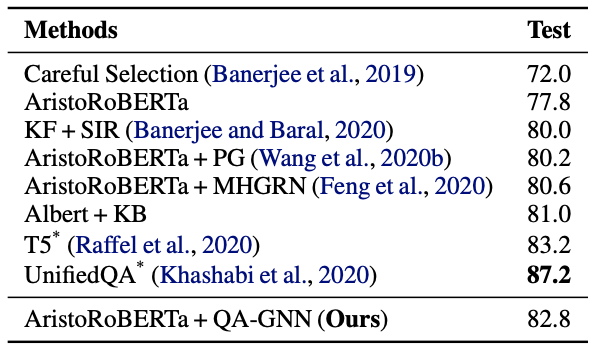

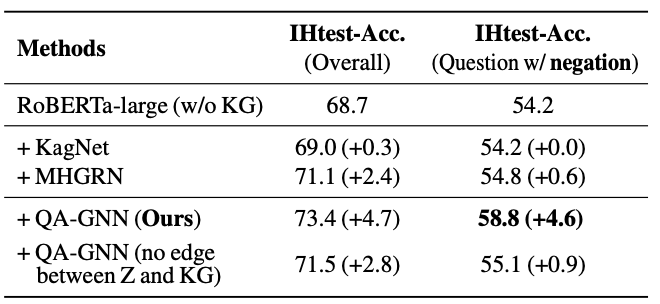

Performance was evaluated on CommonsenseQA and OpenBookQA. ConceptNet is used as the knowledge graph for QA-GNN, and RoBERTa or AristoRoBERTa serves as the language model. Baselines for comparison include KagNet and MHGRN.

Quantitatively, QA-GNN outperformed the baseline methods on CommonsenseQA (in-house split) and OpenBookQA.

Performance comparison with baselines on CommonsenseQA (in-house split)

Performance comparison with baselines on CommonsenseQA (official leaderboard)

Performance comparison with baselines on OpenBookQA (in-house split)

Performance comparison with baselines on OpenBookQA (official leaderboard)

The authors note that the models achieving higher test accuracy on the official leaderboard are 8 to 30$\times$ larger than QA-GNN.

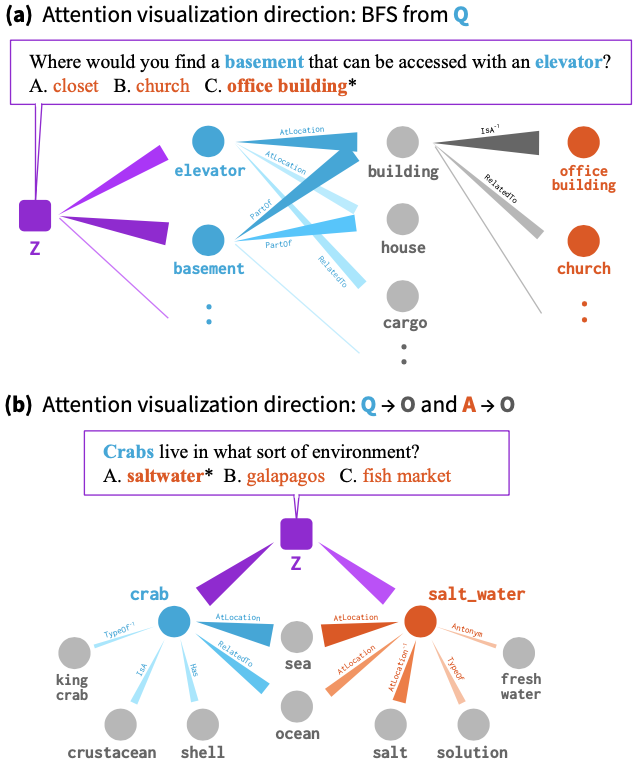

The authors further perform a qualitative analysis of the reasoning process.

The reasoning process was visualized using the attention weights that the GAT learned between connected nodes.

Attention weights of the GAT show reasoning process of the QA-GNN
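One simple way to read a reasoning chain out of such weights is to start at the context node $z$ and greedily follow the highest-weighted edge to a node not yet visited. The sketch below does this. It is an illustrative heuristic, not necessarily how the authors produced their figure. The `(src, dst) -> weight` dict format and the weights themselves are made up.

```python
def trace_attention_path(attn, start="z", max_hops=3):
    """Greedily follow the highest-attention edge from `start`, never revisiting a node.

    attn: dict mapping a (src, dst) edge to its attention weight (hypothetical format).
    """
    path, current = [start], start
    for _ in range(max_hops):
        candidates = [(w, dst) for (src, dst), w in attn.items()
                      if src == current and dst not in path]
        if not candidates:
            break
        _, current = max(candidates)  # ties broken by node name
        path.append(current)
    return path


attn = {("z", "revolving_door"): 0.2, ("z", "security"): 0.5, ("z", "bank"): 0.3,
        ("security", "bank"): 0.7, ("security", "z"): 0.3,
        ("bank", "door"): 0.6, ("bank", "security"): 0.4,
        ("door", "revolving_door"): 0.9}
print(trace_attention_path(attn))  # ['z', 'security', 'bank', 'door']
```

A greedy trace shows only the single strongest chain. Keeping the top few edges per hop gives a fuller picture at the cost of a busier diagram.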

The authors also analyze structured reasoning quantitatively and qualitatively on CommonsenseQA questions that contain negation words.

Structured reasoning (answering questions with negation words) performance over baseline
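Selecting such a question subset can be as simple as a token-level filter. In the sketch below, the negation lexicon `NEGATION_WORDS` is a hypothetical choice, and the word list used in the paper may differ.

```python
import re

# Hypothetical negation lexicon; the paper's exact word list may differ.
NEGATION_WORDS = {"no", "not", "nothing", "never", "none", "nobody"}


def has_negation(question):
    """True if the question contains a negation word or an "n't" contraction."""
    tokens = re.findall(r"[a-z']+", question.lower())
    return any(t in NEGATION_WORDS or t.endswith("n't") for t in tokens)


questions = [
    "If it is not used for hair, a round brush is an example of what?",
    "Where would you find a revolving door?",
    "What wouldn't you put in a fridge?",
]
negated = [q for q in questions if has_negation(q)]
print(len(negated))  # 2
```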

For further experimental results, refer to the original paper, which is worth reading.