Significance

Keypoints

- Propose a method for generating high definition maps with graph neural networks

- Demonstrate qualitative and quantitative performance of the proposed method

Review

Background

High definition (HD) maps are electronic maps of roads that carry precise semantic information (traffic lights, stop signs, etc.). HD maps are usually produced by acquiring road data with sensor suites (LiDAR, radar, camera) and then having human specialists annotate it, which is prohibitively expensive. The authors aim to generate synthetic HD maps in a data-driven way.

Keypoints

Propose a method for generating high definition maps with graph neural networks

Hierarchical graph representation of HD maps

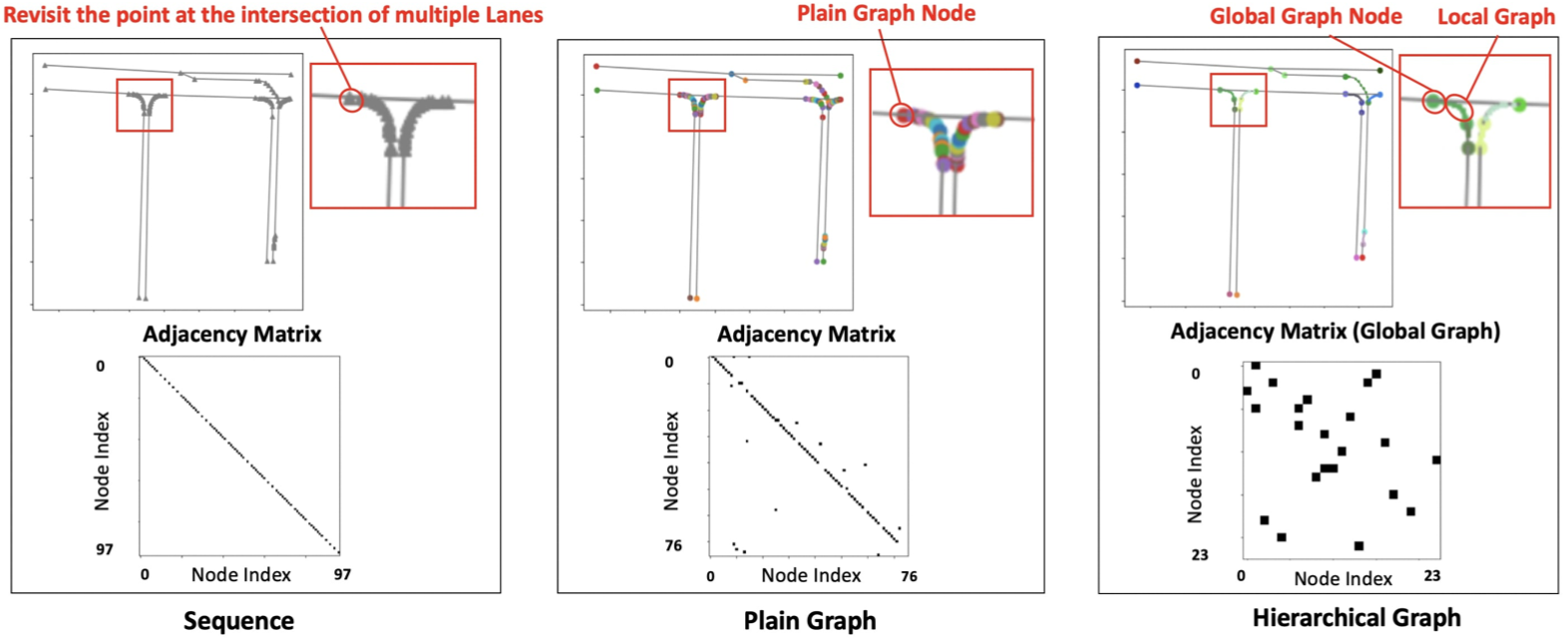

One of the key novelties of the proposed method, HDMapGen, is its hierarchical graph representation of HD maps.

Unlike a sequence representation or a plain graph representation of HD maps, the hierarchical graph representation simplifies the graph structure by separating a global graph from local graphs.

Hierarchical graph representation of HD maps (right)

The global graph consists of key points (endpoints or intersection points of lanes) as nodes, and edges that represent the lane curves connecting them.

The curvature details of each lane are represented as a local graph consisting of control points and the edges connecting them.
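To make the two levels concrete, here is a minimal sketch of one possible in-memory layout. The class names `LocalGraph` and `HierarchicalMap`, the method names, and the choice to treat lanes as directed are all hypothetical illustrations, not HDMapGen's actual data format: each global edge (a lane between two key points) owns a local graph whose control points run from the start key point to the end key point.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]  # (x, y) map coordinate


@dataclass
class LocalGraph:
    """Hypothetical local graph: ordered control points of one lane.
    Edges implicitly connect consecutive control points."""
    control_points: list[Point]

    def edges(self) -> list[tuple[int, int]]:
        return [(i, i + 1) for i in range(len(self.control_points) - 1)]


@dataclass
class HierarchicalMap:
    """Hypothetical container: global key points plus one local graph per lane."""
    key_points: list[Point]  # global nodes: lane endpoints / intersection points
    lanes: dict[tuple[int, int], LocalGraph] = field(default_factory=dict)  # global edges

    def add_lane(self, start: int, end: int, interior: list[Point]) -> None:
        # The local graph runs start key point -> interior control points -> end key point.
        pts = [self.key_points[start], *interior, self.key_points[end]]
        self.lanes[(start, end)] = LocalGraph(pts)

    def adjacency(self) -> list[list[int]]:
        # Global adjacency matrix; lanes are treated as directed here (an assumption).
        n = len(self.key_points)
        adj = [[0] * n for _ in range(n)]
        for s, e in self.lanes:
            adj[s][e] = 1
        return adj


m = HierarchicalMap(key_points=[(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)])
m.add_lane(0, 1, interior=[(5.0, 0.5)])
m.add_lane(1, 2, interior=[(10.5, 4.0), (10.2, 7.0)])
print(m.adjacency())  # [[0, 1, 0], [0, 0, 1], [0, 0, 0]]
```

The global adjacency matrix stays as small as the number of key points no matter how curved the lanes are; the curve detail lives only in the per-lane local graphs.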

Autoregressive modeling

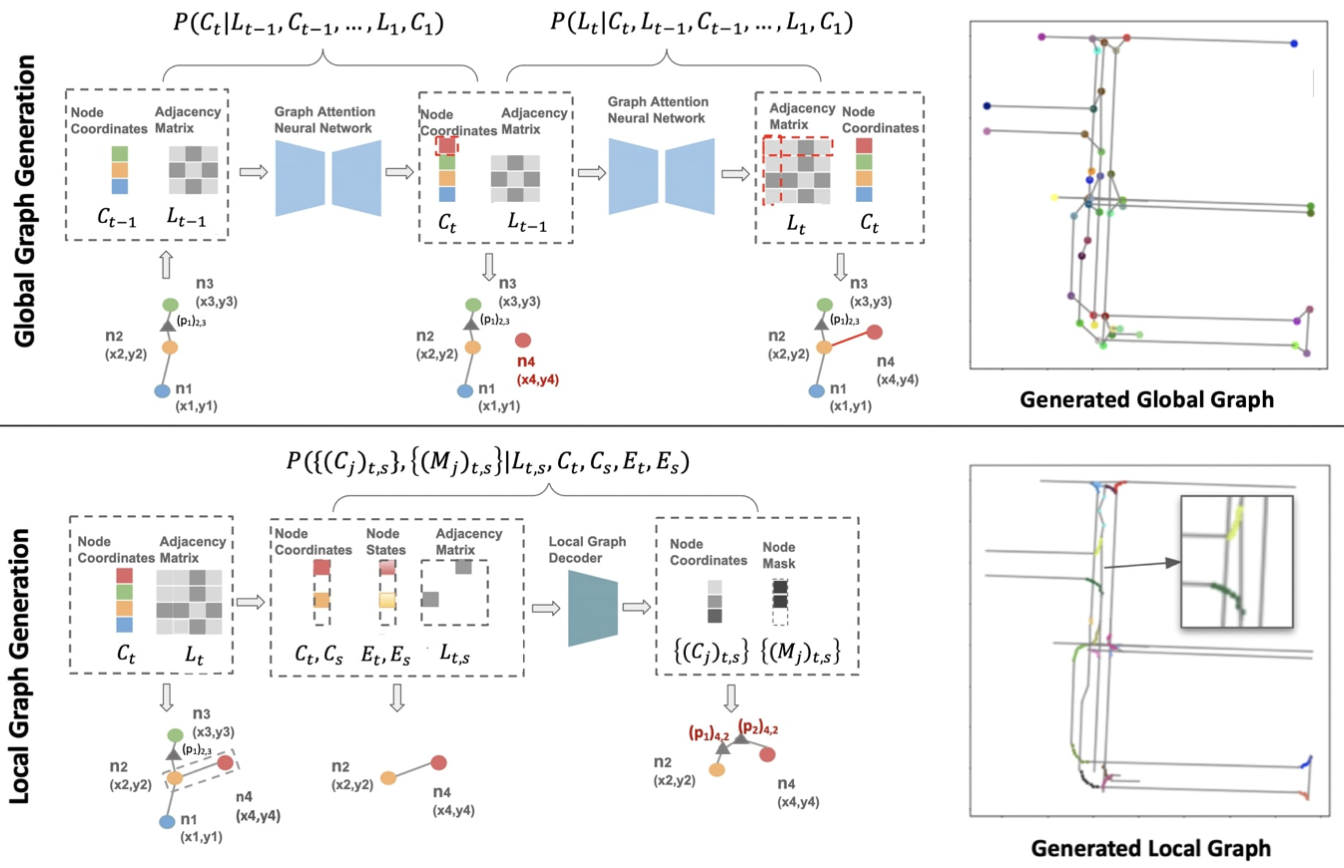

HDMapGen generates graphs autoregressively.

Schematic illustration of the proposed method

At each time step $t$, the global graph is generated conditioned on the previously generated graphs:

\begin{align}
P(C_{t} \mid L_{t-1}, C_{t-1}, \ldots, L_{1}, C_{1}), \\
P(L_{t} \mid C_{t}, L_{t-1}, C_{t-1}, \ldots, L_{1}, C_{1}),
\end{align}

where $C_{t}$ is the node feature (Coordinate) and $L_{t}$ is the adjacency matrix (Lane) of the global graph at time $t$.

This factorization corresponds to the coordinate-first variant: the coordinates (node features) are generated first, and the adjacency matrix is then generated conditioned on the newly generated coordinates.
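Because the generation order interleaves coordinates and lanes as $C_{1}, L_{1}, C_{2}, L_{2}, \ldots$, applying the chain rule to the two conditionals gives the joint probability of a global graph built in $T$ steps. This is a derivation from the factorization above, not a formula quoted from the paper; at $t = 1$ the conditioning set of the first factor is empty.

\begin{align}
P(C_{1}, L_{1}, \ldots, C_{T}, L_{T}) = \prod_{t=1}^{T} P(C_{t} \mid L_{t-1}, C_{t-1}, \ldots, L_{1}, C_{1}) \, P(L_{t} \mid C_{t}, L_{t-1}, C_{t-1}, \ldots, L_{1}, C_{1}).
\end{align}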

Two other orderings are possible: topology-first, which generates the adjacency matrix first, and independent, which generates the coordinates and the adjacency matrix at the same time without conditioning one on the other.
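The three orderings differ only in which quantity is sampled first and what the second sample is allowed to see. The loop below is a toy sketch of that control flow, not HDMapGen's model: `sample_coordinate` and `sample_edges` are hypothetical stand-ins for the learned conditionals (simple geometric heuristics instead of neural networks), and, unlike the real model, they condition on past coordinates only, not on past lanes.

```python
import numpy as np


def sample_coordinate(coords, rng, new_edges=None):
    """Toy stand-in for P(C_t | ...): returns a 2-D key-point coordinate."""
    if not coords:
        return rng.uniform(0.0, 100.0, size=2)
    anchors = [coords[j] for j in new_edges] if new_edges else [coords[-1]]
    # Place the new key point near the nodes it will connect to (or the last node).
    return np.mean(anchors, axis=0) + rng.normal(scale=5.0, size=2)


def sample_edges(coords, rng, new_coord=None, radius=10.0):
    """Toy stand-in for P(L_t | ...): returns indices of earlier nodes to connect to."""
    if not coords:
        return []
    if new_coord is not None:
        # Coordinate known: connect to every earlier node within `radius`.
        return [j for j, c in enumerate(coords) if np.linalg.norm(c - new_coord) < radius]
    # Coordinate unknown: connect to one randomly chosen earlier node.
    return [int(rng.integers(len(coords)))]


def generate(num_nodes, mode="coordinate_first", seed=0):
    rng = np.random.default_rng(seed)
    coords = []
    adj = np.zeros((num_nodes, num_nodes), dtype=int)
    for t in range(num_nodes):
        if mode == "coordinate_first":
            c = sample_coordinate(coords, rng)
            e = sample_edges(coords, rng, new_coord=c)       # L_t sees C_t
        elif mode == "topology_first":
            e = sample_edges(coords, rng)
            c = sample_coordinate(coords, rng, new_edges=e)  # C_t sees L_t
        elif mode == "independent":
            c = sample_coordinate(coords, rng)               # neither sees the other
            e = sample_edges(coords, rng)
        else:
            raise ValueError(f"unknown mode: {mode}")
        coords.append(c)
        for j in e:
            adj[j, t] = adj[t, j] = 1  # undirected here for simplicity
    return np.array(coords), adj
```

In the independent mode the toy edge sampler never sees the new coordinate, so it can link key points that are far apart, a crude analogue of the missing coordinate-topology dependence.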

The graph generation model is built on graph attention networks (GAT).
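For reference, below is a minimal NumPy sketch of one standard single-head GAT layer (Veličković et al., 2018). How many layers and heads HDMapGen uses and which node features it feeds in are not stated in this review, so the parameter shapes here are arbitrary assumptions. Each node projects its features with a shared matrix `W`, scores each neighbor with the attention vector `a`, and aggregates neighbors with softmax-normalized weights.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def gat_layer(H, A, W, a):
    """One single-head graph attention layer (output returned before the nonlinearity).

    H: (N, F) node features, A: (N, N) 0/1 adjacency,
    W: (F, F_out) shared linear map, a: (2 * F_out,) attention vector.
    """
    Z = H @ W                                      # (N, F_out) projected features
    f_out = Z.shape[1]
    # e_ij = LeakyReLU(a^T [z_i || z_j]) for all pairs, computed by splitting a in two.
    e = leaky_relu((Z @ a[:f_out])[:, None] + (Z @ a[f_out:])[None, :])
    mask = (A + np.eye(len(A))) > 0                # attend to neighbors and to self
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)           # numerical stability
    alpha = np.exp(e)                              # exp(-inf) = 0 for non-neighbors
    alpha /= alpha.sum(axis=1, keepdims=True)      # softmax over each node's neighborhood
    return alpha @ Z, alpha
```

A full model would apply a nonlinearity (the original GAT paper uses ELU) and stack several such layers, optionally with multiple heads.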

The local graphs are then generated from the global graph's coordinates and lanes by a multi-layer perceptron (MLP) local graph decoder.
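The following is a hypothetical sketch of such a decoder, written only to show the input/output contract. The review does not specify the decoder's inputs or output parameterization, so two choices here are assumptions: the input is the raw coordinates of a lane's two key points, and the output is `num_ctrl` interior control points expressed as offsets from the straight segment (chord) between them. The real decoder likely consumes learned node embeddings instead.

```python
import numpy as np


def init_mlp(sizes, rng):
    # One (weight, bias) pair per layer, scaled by fan-in.
    return [(rng.normal(scale=1 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def mlp(x, params):
    """Plain MLP: ReLU hidden layers, linear output layer."""
    for W, b in params[:-1]:
        x = np.maximum(x @ W + b, 0.0)
    W, b = params[-1]
    return x @ W + b


def decode_local_graphs(coords, edges, params, num_ctrl):
    """Hypothetical local decoder: one polyline of control points per global edge."""
    starts, ends = coords[edges[:, 0]], coords[edges[:, 1]]            # (E, 2) each
    x = np.concatenate([starts, ends], axis=1)                          # (E, 4) edge input
    offsets = mlp(x, params).reshape(len(edges), num_ctrl, 2)           # (E, K, 2)
    s = np.linspace(0.0, 1.0, num_ctrl + 2)[1:-1]                       # interior chord positions
    chord = starts[:, None, :] + s[None, :, None] * (ends - starts)[:, None, :]
    interior = chord + offsets
    # Local graph = start key point, interior control points, end key point.
    return np.concatenate([starts[:, None], interior, ends[:, None]], axis=1)  # (E, K + 2, 2)


rng = np.random.default_rng(0)
coords = np.array([[0.0, 0.0], [10.0, 0.0], [10.0, 10.0]])
edges = np.array([[0, 1], [1, 2]])
params = init_mlp([4, 32, 3 * 2], rng)
lanes = decode_local_graphs(coords, edges, params, num_ctrl=3)  # shape (2, 5, 2)
```

Predicting offsets from the chord means an untrained or zero output layer already yields straight lanes that pass exactly through the key points, so the network only has to learn the curvature.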

Demonstrate qualitative and quantitative performance of the proposed method

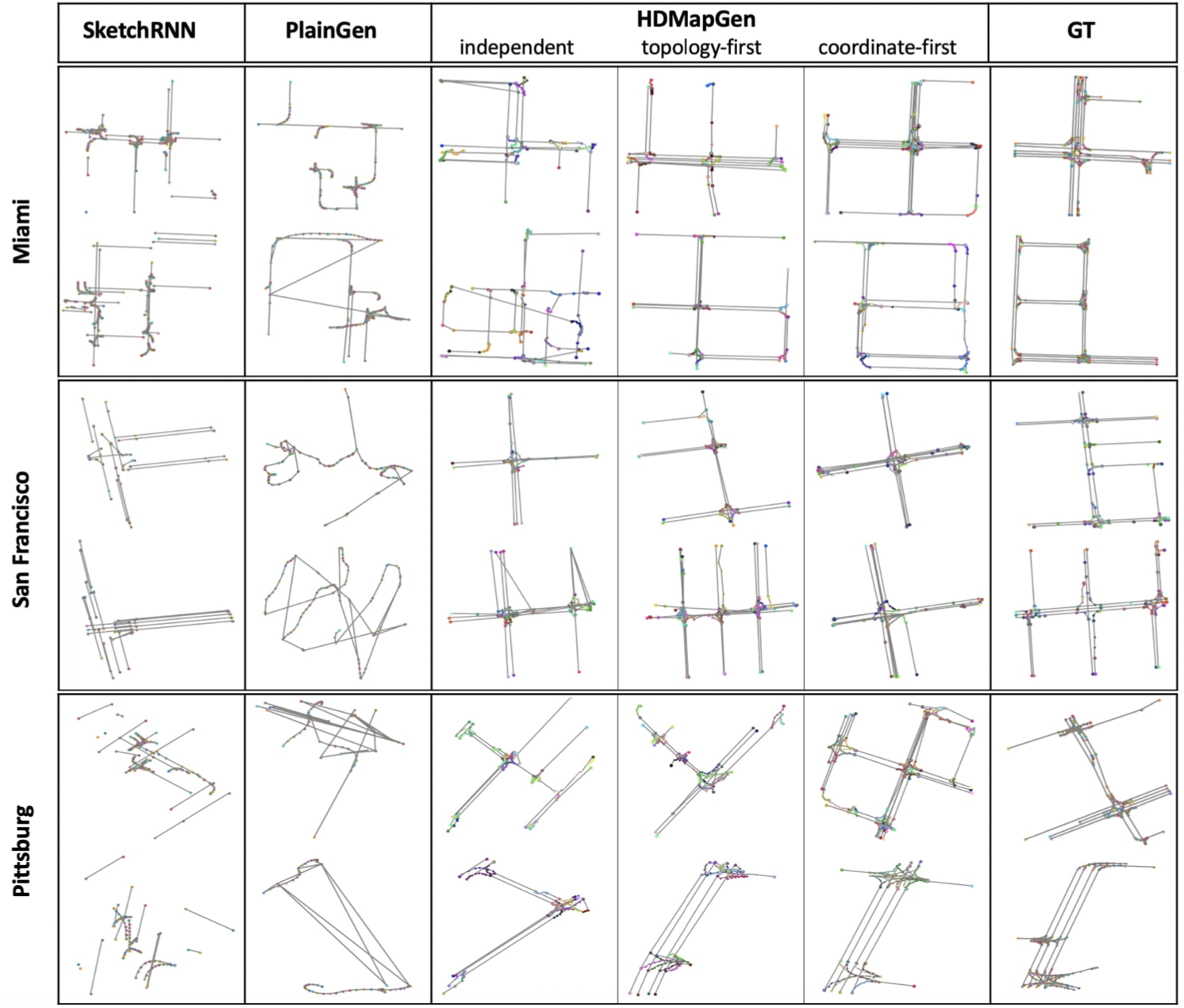

HDMapGen is compared with baseline approaches including SketchRNN (a sequential representation) and PlainGen (a non-hierarchical graph modeled with the global graph generation method).

Qualitative result of the proposed method on the Argoverse dataset

HDMapGen generates more realistic HD maps than the baselines, with properties similar to those of the ground-truth cities.

However, the independent variant of HDMapGen shows degraded generation quality, probably because it does not model the dependence between coordinates and topology.

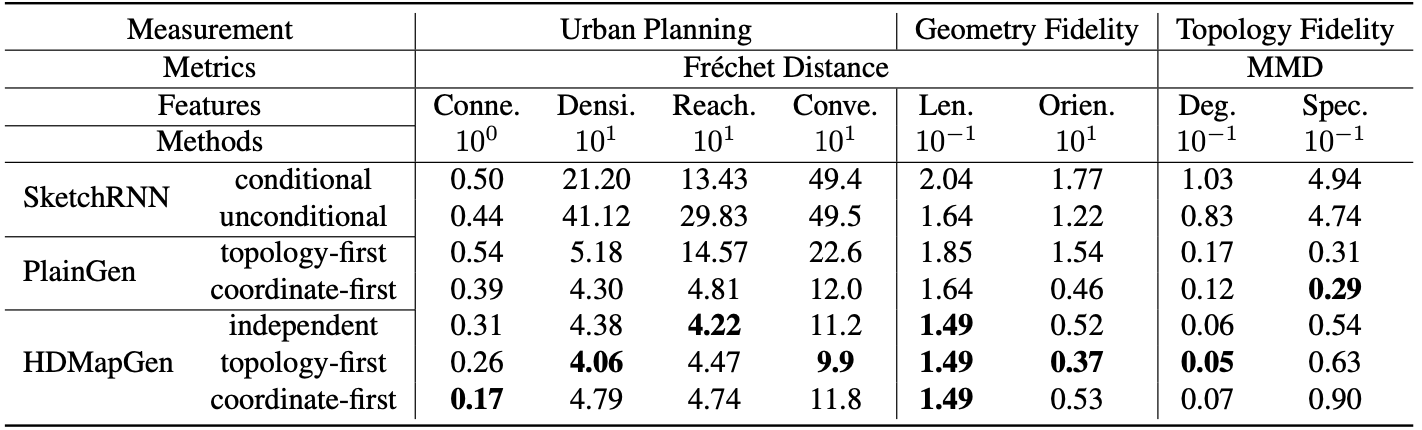

The quantitative performance of HDMapGen is evaluated with the Fréchet distance and the maximum mean discrepancy (MMD).

Quantitative performance of the proposed method on the Argoverse dataset
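Both metrics compare a set of generated samples with a set of real samples. The review does not say which features, kernel, or bandwidth HDMapGen uses, so the sketch below assumes generic feature vectors (one row per map), the Gaussian-fit form of the Fréchet distance, and a biased MMD estimate with an RBF kernel whose bandwidth `sigma` is an arbitrary assumption. For both, lower means the generated distribution is closer to the real one.

```python
import numpy as np


def _sqrtm_psd(S):
    """Square root of a symmetric positive semi-definite matrix via eigh."""
    w, V = np.linalg.eigh(S)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T


def frechet_distance(X, Y):
    """Squared Fréchet distance between Gaussians fitted to X (n, d) and Y (m, d):
    ||mu_x - mu_y||^2 + tr(S_x + S_y - 2 (S_x^{1/2} S_y S_x^{1/2})^{1/2})."""
    mu_x, mu_y = X.mean(axis=0), Y.mean(axis=0)
    S_x = np.atleast_2d(np.cov(X, rowvar=False))
    S_y = np.atleast_2d(np.cov(Y, rowvar=False))
    root = _sqrtm_psd(S_x)
    M = root @ S_y @ root
    cross = _sqrtm_psd((M + M.T) / 2.0)  # symmetrize to absorb rounding error
    return float(np.sum((mu_x - mu_y) ** 2) + np.trace(S_x + S_y - 2.0 * cross))


def mmd_rbf(X, Y, sigma=1.0):
    """Biased estimate of squared MMD with a Gaussian (RBF) kernel."""
    def k(A, B):
        d2 = np.sum(A ** 2, axis=1)[:, None] + np.sum(B ** 2, axis=1)[None, :] - 2.0 * A @ B.T
        return np.exp(-np.maximum(d2, 0.0) / (2.0 * sigma ** 2))
    return float(k(X, X).mean() + k(Y, Y).mean() - 2.0 * k(X, Y).mean())
```

The trace of $(S_x^{1/2} S_y S_x^{1/2})^{1/2}$ equals that of $(S_x S_y)^{1/2}$, which lets the sketch use only symmetric eigendecompositions from NumPy instead of a general matrix square root.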

For the scalability and latency (speed) analyses, refer to the original paper.