Significance

Keypoints

- Propose multi-scale cascaded diffusion model for conditional image generation

- Demonstrate performance of the proposed method by experiments

Review

Background

Diffusion models are a family of generative models that have recently achieved state-of-the-art results in image synthesis (see my previous post for a brief review). They generate samples from a target distribution by gradually denoising random noise drawn from a known distribution. Benchmark diffusion models can generate high-fidelity images, but sampling is slow, and attempts are being made to accelerate it (see my previous post). The authors propose cascaded diffusion models, which generate a high-fidelity image under a given condition by progressively upscaling a low-resolution image. This multi-scale approach can accelerate image synthesis and achieves state-of-the-art results, outperforming BigGAN-deep and ADM on conditional image synthesis.

Keypoints

Propose multi-scale cascaded diffusion model for conditional image generation

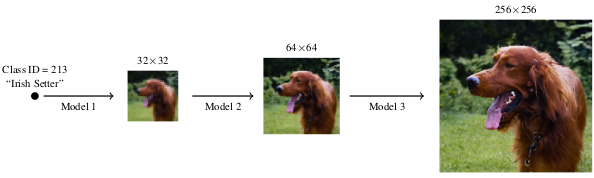

Scheme of cascaded generation

The cascaded diffusion model (CDM) is simply a sequential stack of diffusion models with upscaling operators (e.g. bilinear interpolation).

The first diffusion model generates a low-resolution image from latent noise, conditioned on the class label, while the subsequent diffusion models generate a higher-resolution image conditioned on the low-resolution one.
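The data flow of the cascade can be sketched in a few lines of plain Python. This is only a toy under stated assumptions: `toy_stage` is a hypothetical placeholder that stands in for a trained diffusion model (it simply pulls Gaussian noise towards a target), the function names and sizes are mine, and nearest-neighbour upsampling is used for brevity where the text above mentions bilinear interpolation.

```python
import random


def upsample_nearest(img, factor):
    """Nearest-neighbour upsampling of a 2D list by an integer factor."""
    height, width = len(img), len(img[0])
    return [[img[r // factor][c // factor] for c in range(width * factor)]
            for r in range(height * factor)]


def toy_stage(size, class_label, cond=None, steps=10, rng=None):
    """Hypothetical placeholder for one diffusion model in the cascade.

    Starts from Gaussian noise and moves step by step towards a target:
    the upsampled conditioning image if one is given, otherwise a constant
    image that depends on the class label. A real stage would call a
    trained denoising network here instead.
    """
    if rng is None:
        rng = random.Random(0)
    x = [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in range(size)]
    if cond is None:
        target = [[float(class_label)] * size for _ in range(size)]
    else:
        target = cond
    for _ in range(steps):
        x = [[0.5 * x[r][c] + 0.5 * target[r][c] for c in range(size)]
             for r in range(size)]
    return x


def cascade(class_label, base_size=4, factors=(2, 2), seed=0):
    """Run the base stage, then one conditioned stage per upscaling factor."""
    rng = random.Random(seed)
    img = toy_stage(base_size, class_label, rng=rng)  # low-resolution sample
    size = base_size
    for factor in factors:
        cond = upsample_nearest(img, factor)  # upscaled conditioning image
        size *= factor
        img = toy_stage(size, class_label, cond=cond, rng=rng)
    return img


image = cascade(class_label=3)  # 4x4 -> 8x8 -> 16x16
```

The point of the sketch is the structure: every stage after the first receives both the class label and the upscaled output of the previous stage.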

The authors find that augmenting the conditioning input (the low-resolution image) can improve the final performance of the cascaded diffusion pipeline.

Blurring augmentation, which applies Gaussian blur to the conditioning image, is empirically found to improve performance for the stages at relatively high resolution (128$\times$128 or 256$\times$256).
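As an illustration of what blurring the conditioning image means, here is a minimal separable Gaussian blur in plain Python. The kernel radius (three standard deviations), the border handling (clamping) and the `sigma` value are my assumptions for the sketch, not settings taken from the paper.

```python
import math


def gaussian_kernel(sigma):
    """Normalised 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(values, kernel):
    """Convolve a 1D list with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * values[j]
        out.append(acc)
    return out


def gaussian_blur(img, sigma):
    """Blur a 2D list by filtering the rows first and then the columns."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d(row, kernel) for row in img]
    cols = [blur_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


# A single bright pixel spreads out into a smooth blob.
impulse = [[0.0] * 9 for _ in range(9)]
impulse[4][4] = 1.0
blurred = gaussian_blur(impulse, sigma=1.0)
```

In a training loop, the blurred image would replace the clean low-resolution image as the conditioning input of the super-resolution stage.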

Another form of conditioning augmentation is truncated conditioning augmentation, which stops the low-resolution reverse process at an earlier timestep.
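The truncation itself is just an early exit from the sampling loop. The toy below makes that concrete; the update rule is a hypothetical stand-in for a learned reverse step (it assumes the clean sample is known), and `stop_at` is my name for the timestep at which sampling is cut off.

```python
import random


def toy_reverse_process(target, num_steps, stop_at=0, seed=0):
    """Toy reverse process over timesteps num_steps, ..., stop_at + 1.

    The sample starts as target plus Gaussian noise. Each step shrinks the
    remaining noise by a factor (t - 1) / t, so running down to timestep 0
    recovers the target, while stopping at stop_at > 0 leaves roughly a
    fraction stop_at / num_steps of the initial noise in the sample.
    """
    rng = random.Random(seed)
    x = [v + rng.gauss(0.0, 1.0) for v in target]  # noisy start
    for t in range(num_steps, stop_at, -1):
        x = [tv + (t - 1) / t * (xv - tv) for xv, tv in zip(x, target)]
    return x


clean = [0.0, 1.0, -1.0]
full = toy_reverse_process(clean, num_steps=10)                  # all steps
truncated = toy_reverse_process(clean, num_steps=10, stop_at=3)  # early stop
```

Here `truncated` is still slightly noisy, and it is this partially denoised low-resolution sample that would be handed to the next stage as its conditioning input.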

The experiments show that a plain diffusion model with cascading and conditioning augmentation outperforms the baseline methods without relying on other tricks (such as classifier guidance).

Demonstrate performance of the proposed method by experiments

The main experimental result of the paper is conditional image generation on the ImageNet dataset.

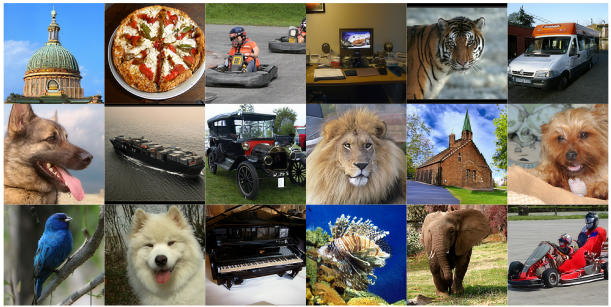

Exemplar images conditionally generated with CDM

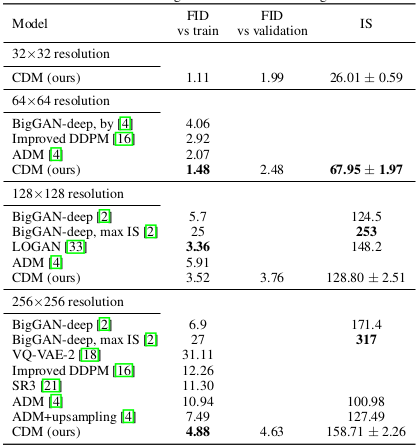

The CDM outperforms other state-of-the-art GANs and diffusion models in terms of the FID score for the conditional image generation task.
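For reference, the FID (Fréchet Inception Distance) is usually defined as follows, where lower is better. The notation is mine rather than the paper's: $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ are the mean and covariance of Inception features computed on real and generated images, respectively.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$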

Performance of the proposed method for conditional image generation on ImageNet dataset

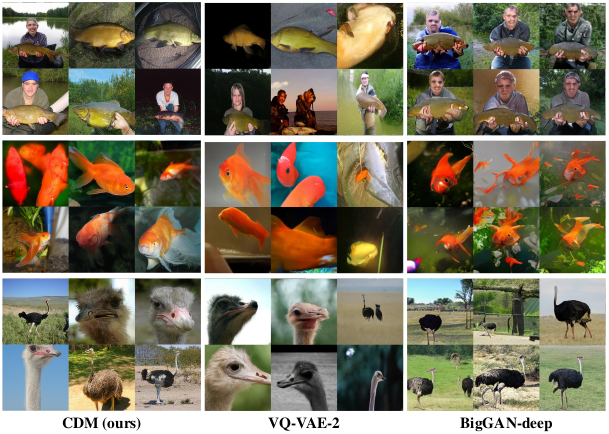

Qualitative comparison of the proposed method with baseline models

For the experimental analysis at various resolutions and the ablation studies, please refer to the original paper. This review may be short, but the experimental results are quite encouraging in that diffusion models beat GANs on (conditional) image synthesis, again.
