Significance

Recaptioning training images, using a captioner trained with high-quality samples, improves text-to-image generation.

Review

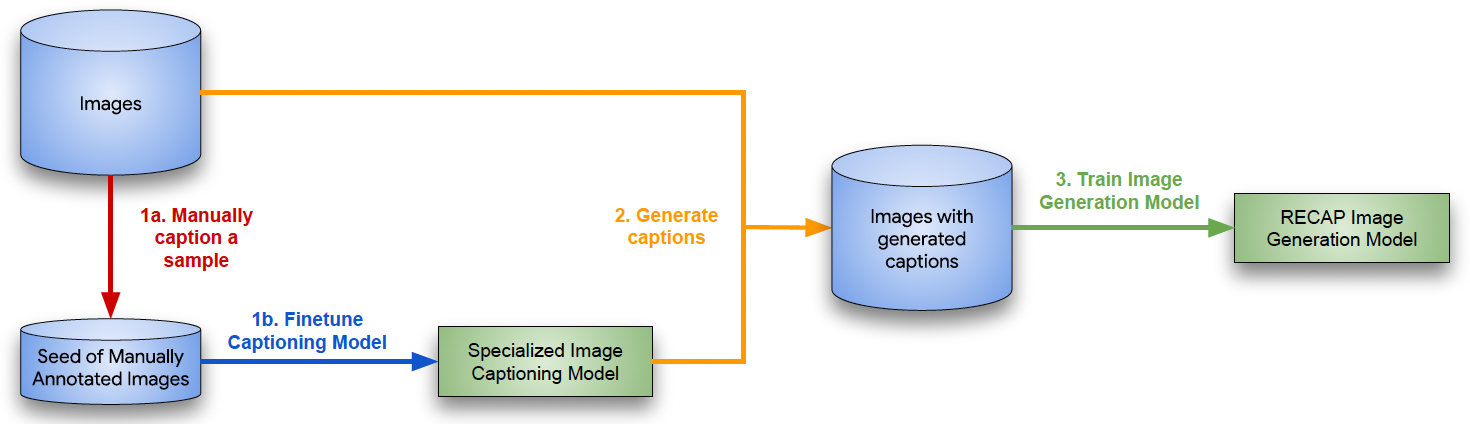

The authors propose RECAP, which addresses the problem that most text-to-image models are trained on web-crawled datasets of low-quality (image, text) pairs. They train an image-to-text model on the web-crawled dataset together with a smaller set of high-quality, manually annotated images, which improves the captions the model generates. The original web-crawled images are then recaptioned with this model, yielding a new set of high-quality (image, text) pairs.

The RECAP

A text-to-image model trained on these recaptioned images shows improved generation performance across various experiments.
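
To make the data flow concrete, the three steps described in this review (train a captioner, recaption the web images, train the generator) can be sketched as below. This is only an illustrative sketch, not the authors' implementation: `Pair`, `recaption`, `recap_pipeline`, and the two `toy_fit_*` functions are hypothetical names, images are represented by file-name strings, and the "training" functions are trivial stand-ins for real model training.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Pair:
    image: str    # placeholder for image data (here just a file name)
    caption: str


Captioner = Callable[[str], str]   # image -> caption
Generator = Callable[[str], str]   # prompt -> image


def recaption(web_pairs: Sequence[Pair], captioner: Captioner) -> List[Pair]:
    """Keep every web-crawled image but replace its caption."""
    return [Pair(p.image, captioner(p.image)) for p in web_pairs]


def recap_pipeline(
    web_pairs: Sequence[Pair],
    curated_pairs: Sequence[Pair],
    fit_captioner: Callable[[List[Pair]], Captioner],
    fit_generator: Callable[[List[Pair]], Generator],
) -> Tuple[Generator, List[Pair]]:
    # Step 1: train the image-to-text model on web data plus the curated set.
    captioner = fit_captioner(list(web_pairs) + list(curated_pairs))
    # Step 2: relabel the original web-crawled images.
    recaptioned = recaption(web_pairs, captioner)
    # Step 3: train the text-to-image model on the recaptioned pairs.
    return fit_generator(recaptioned), recaptioned


# Toy stand-ins (assumptions for illustration only, no real learning happens).
def toy_fit_captioner(pairs: List[Pair]) -> Captioner:
    return lambda image: f"a detailed description of {image}"


def toy_fit_generator(pairs: List[Pair]) -> Generator:
    lookup = {p.caption: p.image for p in pairs}
    return lambda prompt: lookup.get(prompt, "<no image>")


web = [Pair("cat.jpg", "IMG_0042"), Pair("dog.jpg", "buy now")]
curated = [Pair("bird.jpg", "a small red bird perched on a branch")]
generator, recaptioned = recap_pipeline(
    web, curated, toy_fit_captioner, toy_fit_generator
)
```

The point of the sketch is that the images themselves never change: only the caption half of each web-crawled pair is replaced before the generator is trained.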