Significance

Keypoints

- Propose a method for domain adaptation in object detection tasks

- Demonstrate performance of the proposed method by experiments

Review

Background

Deep neural networks often fail to generalize to data distributions that were not seen during training. For example, an object detection model trained on self-driving scenes may perform poorly on a retail-checkout object detection task. Such a domain shift between the training and test data is often a major limitation in practical model deployment, since the model may not be as robust as expected. This work addresses this issue and proposes simple methods to adapt an object detection model to an unlabeled test dataset with domain shift. The key idea is to generate clean and accurate pseudo-labels for the unlabeled test dataset, which then provide tight supervision for training the model to be deployed on both the training (source) and test (target) data.

Keypoints

Propose a method for domain adaptation in object detection tasks

The two key ideas of this work are DomainMix augmentation and gradual adaptation.

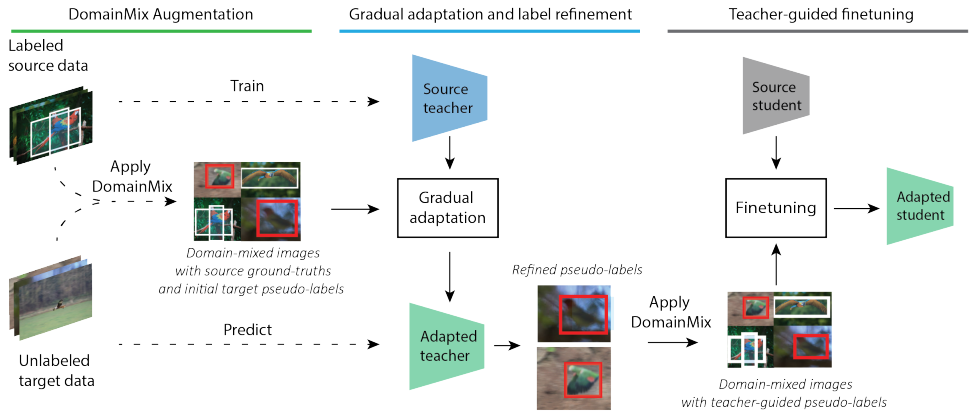

Schematic illustration of the proposed method

Specifically, the proposed method first trains a large source teacher model on the labeled source data, then uses DomainMix augmentation and gradual adaptation to adapt the teacher so that it infers clean pseudo-labels for the unlabeled target dataset.
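The overall flow can be sketched as a teacher-based self-training pipeline. This is a minimal, framework-agnostic sketch under stated assumptions, not the authors' implementation: `train_teacher`, `adapt`, `predict`, and `train_student` are hypothetical callables standing in for real detector training and inference, and the last step (training the model to be deployed on source labels plus refined pseudo-labels) is our reading of the method.

```python
from typing import Any, Callable, List, Sequence, Tuple

# A sample is an (image, labels) pair; concrete types are left abstract here.
Sample = Tuple[Any, Any]


def adaptation_pipeline(
    source: Sequence[Sample],
    target_images: Sequence[Any],
    train_teacher: Callable[[Sequence[Sample]], Any],  # hypothetical
    adapt: Callable[[Any, Sequence[Sample], Sequence[Sample]], Any],  # hypothetical
    predict: Callable[[Any, Any], Any],  # hypothetical: (model, image) -> labels
    train_student: Callable[[List[Sample]], Any],  # hypothetical
) -> Tuple[Any, List[Sample]]:
    # 1. Train a large source teacher on the labeled source data only.
    teacher = train_teacher(source)
    # 2. Label the unlabeled target images with the source teacher (noisy pseudo-labels).
    initial = [(img, predict(teacher, img)) for img in target_images]
    # 3. Adapt the teacher to the target domain using source ground truth plus
    #    the initial pseudo-labels (e.g. with DomainMix and gradual adaptation).
    teacher = adapt(teacher, source, initial)
    # 4. Re-label the target images with the adapted teacher (cleaner pseudo-labels).
    refined = [(img, predict(teacher, img)) for img in target_images]
    # 5. Train the model to be deployed on source labels plus refined pseudo-labels.
    student = train_student(list(source) + refined)
    return student, refined
```

Passing each stage in as a callable keeps the sketch independent of any detection framework; with a real detector, each callable would wrap a training or inference loop.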

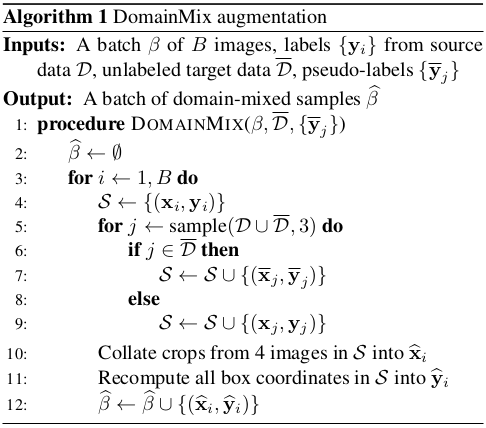

DomainMix

As the figure above shows, DomainMix samples images from both the source and target domains and merges them into a single training image. For object detection, the bounding boxes and their classes come from the ground-truth labels for source images and from the initial, noisy pseudo-labels for target images. DomainMix-ed samples are beneficial because (i) they produce a diverse set of samples, (ii) they help the model learn representations of both the source and target domains, and (iii) every mixed sample always contains source images with clean ground-truth labels, which reduces the negative effect of false or noisy pseudo-labels. With DomainMix augmentation, the source teacher model can be adapted into a target teacher model.
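A minimal NumPy sketch of the merging step is shown below. The 2x2 mosaic layout with two source and two target tiles is an assumption (the description above only says images from both domains are merged into one), tiles are assumed to be pre-resized to the same shape, and the random scaling or cropping a real implementation would likely apply is omitted. The function name `domain_mix` is hypothetical.

```python
import numpy as np


def domain_mix(source_tiles, target_tiles, rng):
    """Merge two source and two target images into one 2x2 mosaic (assumed layout).

    Each tile is (image, boxes, classes): image is an (h, w, c) array and all
    tiles share the same shape; boxes are (x1, y1, x2, y2) in tile pixels.
    Source boxes are ground truth, target boxes are (noisy) pseudo-labels.
    """
    tiles = list(source_tiles) + list(target_tiles)
    if len(tiles) != 4:
        raise ValueError("expected two source and two target tiles")
    h, w, c = tiles[0][0].shape
    canvas = np.zeros((2 * h, 2 * w, c), dtype=tiles[0][0].dtype)
    all_boxes, all_classes = [], []
    # Randomly assign tiles to quadrants so neither domain always sits in the same place.
    for slot, idx in enumerate(rng.permutation(4)):
        image, boxes, classes = tiles[idx]
        y0, x0 = (slot // 2) * h, (slot % 2) * w
        canvas[y0:y0 + h, x0:x0 + w] = image
        # Shift boxes from tile coordinates into mosaic coordinates.
        offset = np.array([x0, y0, x0, y0], dtype=np.float32)
        all_boxes.append(np.asarray(boxes, dtype=np.float32).reshape(-1, 4) + offset)
        all_classes.append(np.asarray(classes, dtype=np.int64).reshape(-1))
    return canvas, np.concatenate(all_boxes), np.concatenate(all_classes)
```

Because the labels of each tile travel with it, every mosaic mixes clean source supervision with target pseudo-labels, which is the property point (iii) above relies on.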

Pseudocode of the proposed DomainMix

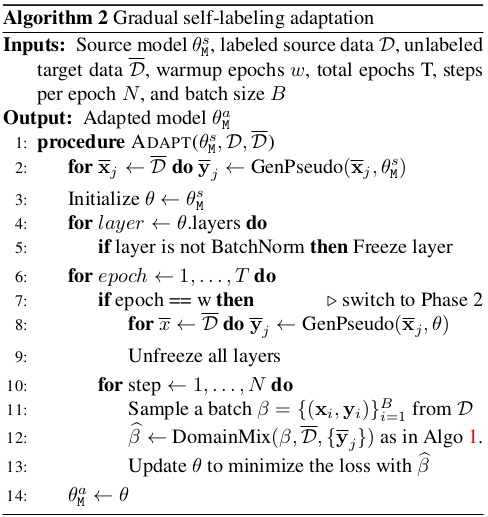

Gradual adaptation

Gradual adaptation refers to fine-tuning the model in stages. In the early stage of training, only the batch normalization layers are trained while the weights of all other layers are frozen. In the later stage, all parameters are trained.
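The two-stage schedule can be sketched in PyTorch by toggling `requires_grad`. This is a minimal sketch, not the authors' code: `set_stage` and `gradual_adapt` are hypothetical helpers, and `bn_epochs`, `total_epochs`, and `lr` are illustrative hyperparameters rather than values from the paper.

```python
import torch
from torch import nn

BN_TYPES = (nn.BatchNorm1d, nn.BatchNorm2d, nn.BatchNorm3d)


def set_stage(model: nn.Module, bn_only: bool) -> None:
    """bn_only=True: freeze everything except BatchNorm affine parameters.
    bn_only=False: make every parameter trainable."""
    for p in model.parameters():
        p.requires_grad_(not bn_only)
    if bn_only:
        for m in model.modules():
            if isinstance(m, BN_TYPES):
                for p in m.parameters():
                    p.requires_grad_(True)


def gradual_adapt(model, batches, loss_fn, bn_epochs=1, total_epochs=2, lr=1e-2):
    # One optimizer over all parameters: frozen ones get no gradient, so SGD skips them.
    opt = torch.optim.SGD(model.parameters(), lr=lr)
    model.train()  # in train mode, BN running statistics also update on the new data
    for epoch in range(total_epochs):
        set_stage(model, bn_only=epoch < bn_epochs)  # BN-only stage first, then full
        for x, y in batches:
            opt.zero_grad()
            loss = loss_fn(model(x), y)
            loss.backward()
            opt.step()
    return model
```

Training only the BatchNorm parameters first lets the normalization statistics and affine terms adjust to the target domain while the pretrained convolutional features stay intact; unfreezing everything afterwards allows full fine-tuning from that better starting point.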

Pseudocode of the proposed Gradual adaptation

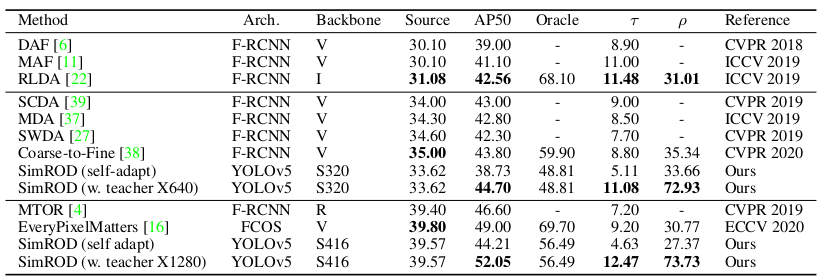

Demonstrate performance of the proposed method by experiments

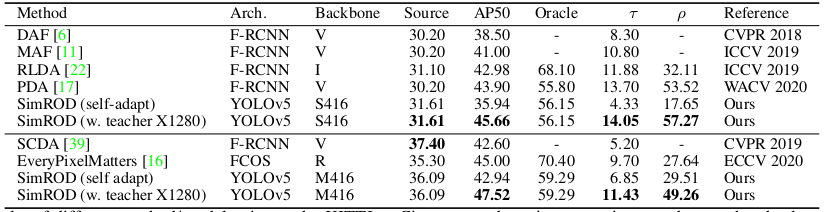

Synthetic-to-real and cross-camera domain shifts are simulated with the Sim10k-to-Cityscapes and KITTI-to-Cityscapes settings, respectively. The proposed method is compared against a variety of baseline domain adaptation methods.

Comparative study result on synthetic-to-real domain adaptation

Comparative study result on cross-camera shift domain adaptation

The proposed method shows a significant performance improvement over the baselines ($\tau$ and $\rho$ denote the absolute gain and the effective gain, respectively).
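To make the two metrics concrete, here is a small sketch. It assumes that $\tau$ is the AP of the adapted model minus the AP of the source-only model, and that $\rho$ is $\tau$ divided by the gap between an oracle model (trained with target labels) and the source-only model, expressed as a percentage. These definitions are our assumption about the paper's convention, and the numbers below are hypothetical, not results from the paper.

```python
def absolute_gain(ap_adapted: float, ap_source_only: float) -> float:
    """tau: improvement in AP points over the source-only model."""
    return ap_adapted - ap_source_only


def effective_gain(ap_adapted: float, ap_source_only: float, ap_oracle: float) -> float:
    """rho (assumed definition): percentage of the source-only-to-oracle gap closed."""
    gap = ap_oracle - ap_source_only
    if gap <= 0:
        raise ValueError("oracle AP must exceed source-only AP")
    return 100.0 * absolute_gain(ap_adapted, ap_source_only) / gap


# Hypothetical numbers for illustration only (not results from the paper).
tau = absolute_gain(52.0, 40.0)         # 12.0 AP points
rho = effective_gain(52.0, 40.0, 60.0)  # 60.0 percent of the gap closed
```

Under this reading, $\rho$ normalizes the improvement by how much room there was to improve, which makes gains comparable across benchmarks whose source-only and oracle APs differ.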

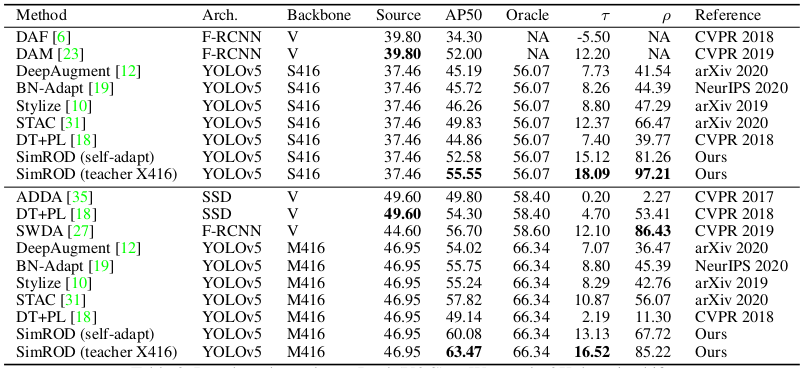

For artistic domain adaptation, real-to-clipart, real-to-watercolor, and real-to-comic shifts are simulated by adapting from VOC07 (real images) to the Clipart1k, Watercolor2k, and Comic2k datasets.

Comparative study result on real-to-watercolor domain adaptation

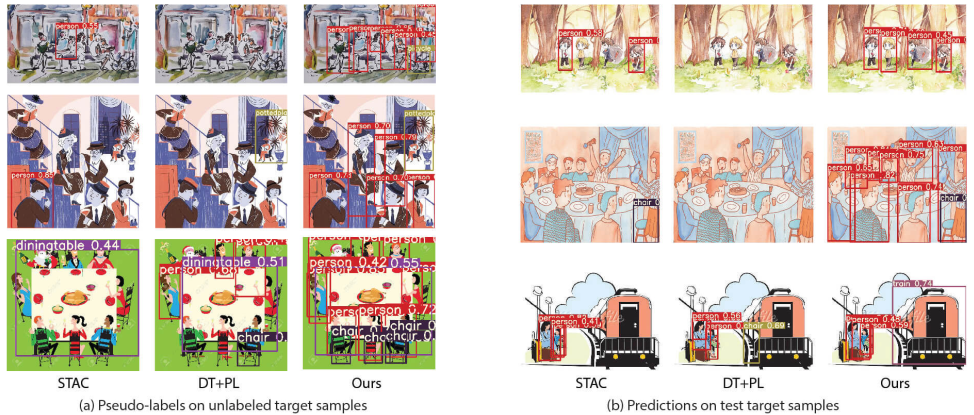

Qualitative results of the proposed method. (a) Quality of the generated pseudo-labels. (b) Prediction results on target-domain samples.

The proposed method also improves performance on artistic domain adaptation.