Significance

Keypoints

- Propose an adversarial example generating algorithm for biomedical text data

- Demonstrate the effectiveness and naturalness of the generated adversarial examples through experiments and human evaluation

Review

Background

Unlike with the image data used in computer vision tasks, generating adversarial examples for text data is quite challenging. The difficulty comes from the discrete nature of the input space and from the requirement that the generated adversarial example be semantically coherent with the original text. Early studies that relied on text-level manipulation often produced unnatural or grammatically incorrect examples. Some of the more recent works based on word embeddings, such as TextFooler (TF) or BAE, tend to create more plausible examples that fit the overall context. However, further challenges arise in domain-specific adversarial example generation. For example, generating adversarial examples for biomedical text requires handling multi-word phrases (e.g. colorectal adenoma), variant representations of the same medical entity (e.g. Type I Diabetes / Type One Diabetes), and spelling variations. The authors propose BBAEG (Biomedical BERT-based Adversarial Example Generation) to generate adversarial examples for biomedical text.

For a more formal definition, let the data-label pairs be $(D,Y) = [(D_{1},y_{1}),\dots,(D_{n},y_{n})]$ and let $M:D\rightarrow Y$ be a classification model. Given a text $D$ with label $y$, the goal is to generate an adversarial example $D_{adv}$ such that $M(D_{adv}) \neq y$. In addition, $D_{adv}$ should be grammatically correct and semantically similar to the original text $D$, that is, $Sim(D, D_{adv}) \geq \alpha$, where $\alpha$ is a similarity threshold.
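As a minimal sketch of this definition, the check below tests both conditions for a candidate $D_{adv}$. The names `is_valid_adversarial` and `jaccard_similarity` are hypothetical, and the token-overlap score is only a toy stand-in for $Sim$; the paper's actual similarity measure is not specified in this review.

```python
from typing import Callable


def jaccard_similarity(a: str, b: str) -> float:
    """Toy stand-in for Sim: token-set overlap in [0, 1] (an assumption)."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def is_valid_adversarial(
    model: Callable[[str], int],
    d: str,
    d_adv: str,
    y: int,
    alpha: float,
    sim: Callable[[str, str], float] = jaccard_similarity,
) -> bool:
    """True if M(D_adv) != y and Sim(D, D_adv) >= alpha."""
    return model(d_adv) != y and sim(d, d_adv) >= alpha


# Toy classifier (hypothetical): label 1 if the token "nausea" appears.
toy_model = lambda text: 1 if "nausea" in text.split() else 0
original = "the drug caused severe nausea"
candidate = "the drug caused severe nausae"
fooled = is_valid_adversarial(toy_model, original, candidate, y=1, alpha=0.6)
```

Grammatical correctness is not checked here; it would need a separate judgment, such as the human evaluation described later.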

Keypoints

Propose an adversarial example generating algorithm for biomedical text data

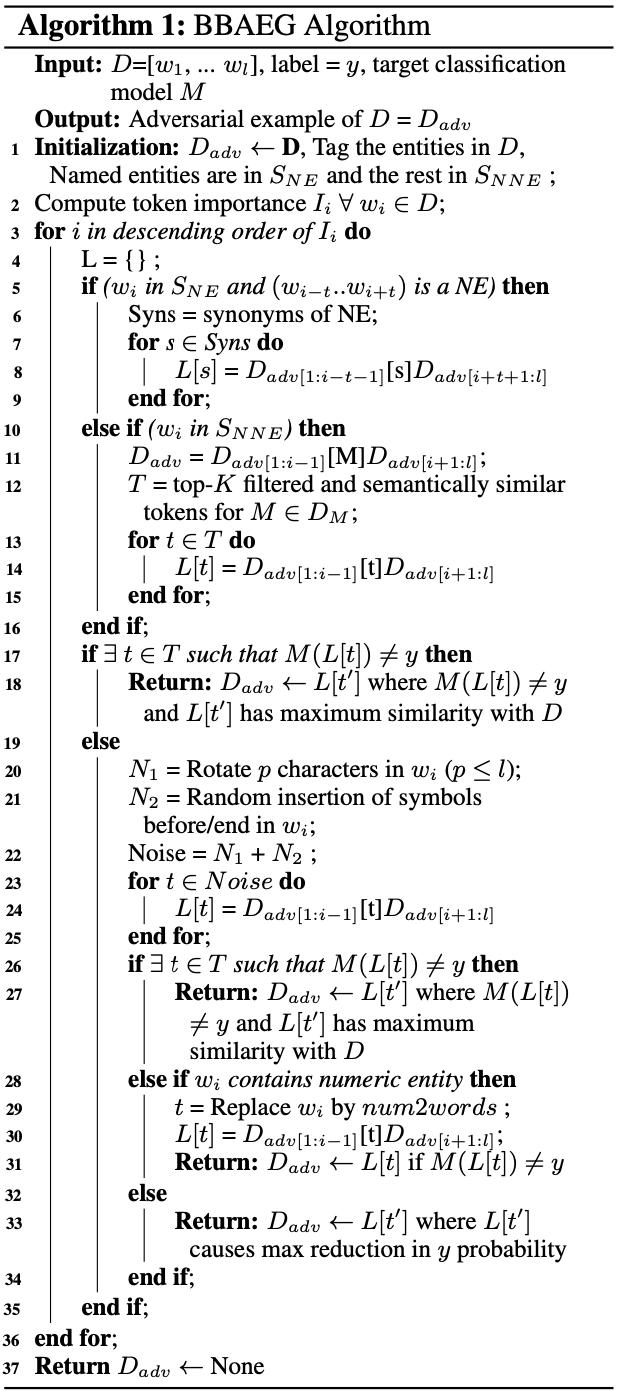

The BBAEG algorithm consists of the following steps:

- Tag biomedical entities and prepare two classes NE (named entities) and non-NE (non-named entities).

- Rank importance of the words for perturbation

- Choose perturbation scheme

- Generate adversarial example

Tag biomedical entities

Biomedical named entities are extracted by applying scispaCy with the en_ner_bc5cdr_md model to each text sample $D_{i}$. Entities are linked with entity linkers (DrugBank, MeSH), and synonyms are obtained with DrugBank and pyMeshSim. Multi-word named entities are included. Words that are not in the NE set are placed in the non-NE set.
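The split into NE and non-NE sets can be sketched as follows, assuming an NER tagger has already returned entity spans as half-open token index ranges. The function `split_ne_non_ne` and the span format are hypothetical illustrations, not scispaCy's API.

```python
from typing import List, Tuple


def split_ne_non_ne(
    tokens: List[str], entity_spans: List[Tuple[int, int]]
) -> Tuple[List[str], List[str]]:
    """Partition tokens into multi-word NE phrases and non-NE words.

    entity_spans holds half-open (start, end) token ranges, as a
    hypothetical NER tagger might return them.
    """
    ne_phrases: List[str] = []
    covered = set()
    for start, end in entity_spans:
        # Keep a multi-word entity together as one phrase.
        ne_phrases.append(" ".join(tokens[start:end]))
        covered.update(range(start, end))
    non_ne = [tok for i, tok in enumerate(tokens) if i not in covered]
    return ne_phrases, non_ne


tokens = "patient developed colorectal adenoma after aspirin".split()
ne, non_ne = split_ne_non_ne(tokens, [(2, 4), (5, 6)])
```

Keeping "colorectal adenoma" as a single phrase matters because a synonym lookup on "colorectal" or "adenoma" alone would not refer to the same entity.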

Rank importance of the words

The importance $I_{i}$ of each word token is estimated by deleting that word and measuring the resulting decrease in the predicted probability of the correct label. Words are then perturbed in order of this importance ranking.
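One way to read this step is $I_{i} = P(y \mid D) - P(y \mid D \setminus w_{i})$. The sketch below implements that reading; `rank_by_importance` and the toy `predict_proba` are hypothetical, and a real setup would call the classifier $M$ instead.

```python
from typing import Callable, List, Sequence, Tuple


def rank_by_importance(
    tokens: List[str],
    true_label: int,
    predict_proba: Callable[[List[str]], Sequence[float]],
) -> List[Tuple[int, str, float]]:
    """Return (index, token, probability drop), most important first."""
    base = predict_proba(tokens)[true_label]
    scores = []
    for i, tok in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]  # delete the i-th word
        drop = base - predict_proba(reduced)[true_label]
        scores.append((i, tok, drop))
    return sorted(scores, key=lambda s: s[2], reverse=True)


# Toy classifier (an assumption): confident in label 1 only if "nausea" appears.
def toy_predict_proba(tokens: List[str]) -> Sequence[float]:
    p = 0.9 if "nausea" in tokens else 0.2
    return [1.0 - p, p]


ranking = rank_by_importance(["drug", "caused", "nausea"], 1, toy_predict_proba)
```

This needs one extra forward pass per token, so the cost grows linearly with the text length.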

Choose perturbation scheme

The perturbation scheme is selected by an ordered, sieve-based approach.

- Sieve 1: Named entity tokens are replaced by synonyms obtained through ontology linking, while non-named entity tokens are replaced using BERT-MLM.

- Sieve 2: Spelling noise, including random character rotation and symbol insertion, is introduced.

- Sieve 3: Numeric entities are replaced by expanding the numeric digits.
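Sieves 2 and 3 can be illustrated with a rough sketch. The exact noise rules are not given in this review, so everything here is an assumption: `rotate_chars` rotates a random window of characters by one position, `insert_symbol` inserts one symbol at a random position, and `expand_numeric` spells out integer tokens from 0 to 19. Sieve 1 is omitted because it depends on an ontology and a masked language model.

```python
import random
from typing import List

_NUM_WORDS = [
    "zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
    "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
    "sixteen", "seventeen", "eighteen", "nineteen",
]


def rotate_chars(word: str, rng: random.Random) -> str:
    """Rotate a random window (length >= 2) of characters left by one."""
    if len(word) < 3:
        return word
    i = rng.randrange(0, len(word) - 1)
    j = rng.randrange(i + 2, len(word) + 1)  # exclusive end of the window
    window = word[i:j]
    return word[:i] + window[1:] + window[0] + word[j:]


def insert_symbol(word: str, rng: random.Random, symbols: str = "-_*") -> str:
    """Insert one symbol at a random position."""
    pos = rng.randrange(0, len(word) + 1)
    return word[:pos] + rng.choice(symbols) + word[pos:]


def expand_numeric(tokens: List[str]) -> List[str]:
    """Spell out small integer tokens; leave all other tokens unchanged."""
    out = []
    for tok in tokens:
        if tok.isdecimal() and int(tok) < len(_NUM_WORDS):
            out.append(_NUM_WORDS[int(tok)])
        else:
            out.append(tok)
    return out


rng = random.Random(0)  # fixed seed so the noise is reproducible
rotated = rotate_chars("adenoma", rng)
noisy = insert_symbol("adenoma", rng)
expanded = expand_numeric("took 2 tablets for 30 days".split())
```

Passing an explicit `random.Random` instance keeps the perturbations reproducible, which helps when comparing attack results across runs.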

Generate adversarial example

The final adversarial example is the candidate, among the three sieve-generated adversaries, that is most similar to the original text.
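The selection step can be sketched as picking the highest-scoring candidate above the threshold $\alpha$. The character-level `difflib` ratio used here is only a stand-in for a semantic similarity measure, and `pick_most_similar` is a hypothetical name.

```python
from difflib import SequenceMatcher
from typing import Callable, List, Optional


def char_similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for semantic Sim."""
    return SequenceMatcher(None, a, b).ratio()


def pick_most_similar(
    original: str,
    candidates: List[str],
    alpha: float = 0.0,
    sim: Callable[[str, str], float] = char_similarity,
) -> Optional[str]:
    """Return the candidate most similar to the original, or None if no
    candidate reaches the threshold alpha."""
    scored = [(sim(original, c), c) for c in candidates]
    scored = [(s, c) for s, c in scored if s >= alpha]
    if not scored:
        return None
    return max(scored, key=lambda sc: sc[0])[1]


original = "patient took 2 tablets"
candidates = ["patient took two tablets", "unrelated text"]
best = pick_most_similar(original, candidates)
```

In a full pipeline the candidates would first be filtered to those that actually change the model's prediction; that filter is left out here for brevity.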

The full process of BBAEG is demonstrated below.

The BBAEG algorithm

Demonstrate the effectiveness and naturalness of the generated adversarial examples through experiments and human evaluation

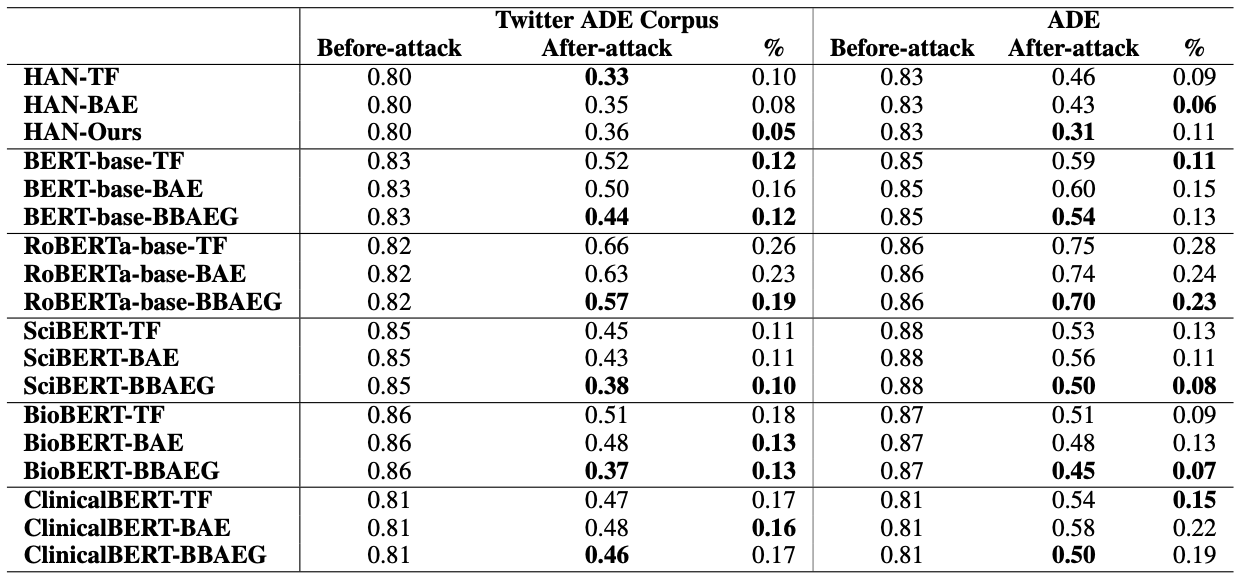

The effectiveness of BBAEG is demonstrated by the performance drop after the adversarial attack and by the perturbation ratio (%) on two biomedical datasets (ADE and Twitter ADE).

Six classification models (Hierarchical Attention Model, BERT, RoBERTa, BioBERT, Clinical-BERT, and SciBERT) were employed as $M$.

The baseline adversarial generation methods compared with BBAEG were TF and BAE.

Effectiveness of the BBAEG

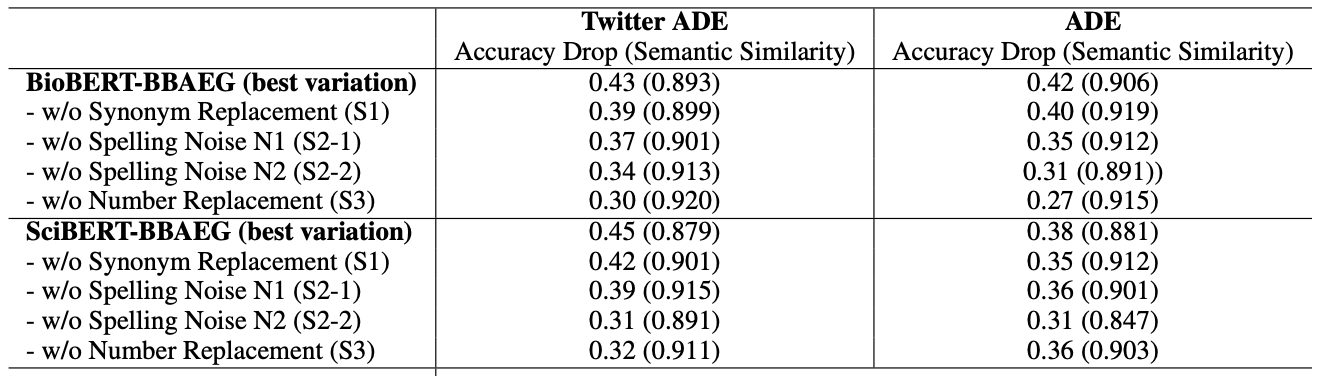

For self-comparison, an ablation of each sieve in the perturbation scheme was evaluated.

Ablation of perturbation scheme options

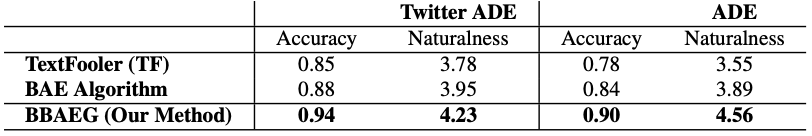

Human evaluation was performed on the naturalness and accuracy of the generated adversarial instances.

Human evaluation of BBAEG

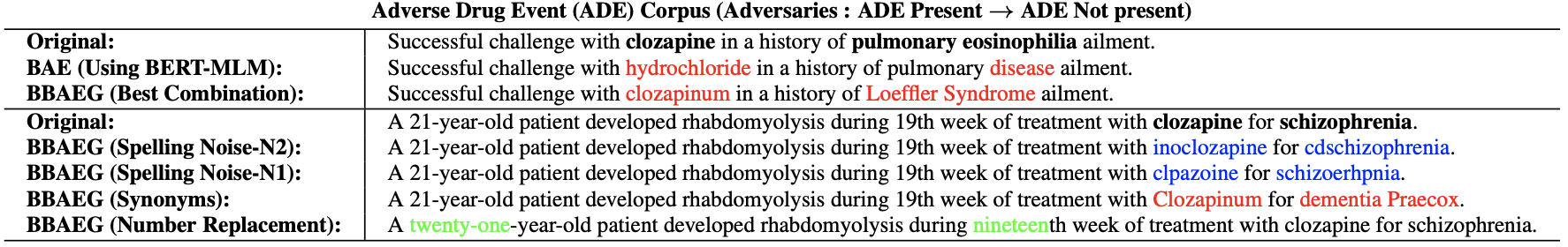

Some handpicked examples generated by BBAEG are also presented in the paper.

Adversarial examples generated with BBAEG
